When human observers look at an image, attentive mechanisms drive their gaze towards salient regions. Neuroscientists and computer vision researchers have studied how to emulate this ability for more than 80 years. Only recently, thanks to the widespread adoption of deep learning, have saliency prediction models improved considerably.

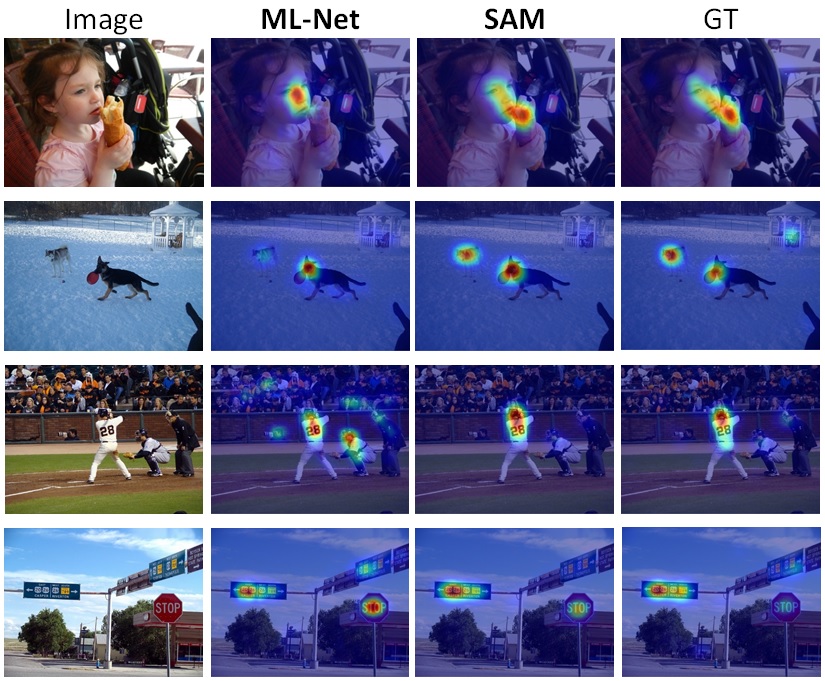

Data-driven saliency has recently gained a lot of attention thanks to the use of Convolutional Neural Networks for predicting gaze fixations. In this project we go beyond standard approaches to saliency prediction, in which gaze maps are computed with a feed-forward network. We present a novel model that predicts accurate saliency maps by incorporating neural attentive mechanisms. The core of our solution is a Convolutional LSTM that focuses on the most salient regions of the input image to iteratively refine the predicted saliency map. Additionally, to tackle the center bias present in human eye fixations, our model learns a set of prior maps generated with Gaussian functions. An extensive evaluation shows that the proposed architecture outperforms the current state of the art on two public saliency prediction datasets. We further study the contribution of each key component and show that these components are robust across different scenarios.
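One way to realize learned Gaussian prior maps is sketched below. This is a minimal NumPy illustration, not the authors' implementation. It assumes that each prior is an axis-aligned 2D Gaussian whose means and standard deviations, given in normalized image coordinates, would be trainable parameters in a real model. It also assumes that the priors are concatenated to the feature maps and mixed by a convolution, which is a 1x1 convolution here for brevity. The function names (`gaussian_priors`, `add_priors`), the peak normalization and the parameter values are hypothetical.

```python
import numpy as np


def gaussian_priors(height, width, mu_x, mu_y, sigma_x, sigma_y):
    """Return N prior maps of shape (N, height, width), one 2D Gaussian per prior.

    Coordinates are normalized to [0, 1]. mu_* and sigma_* are length-N sequences;
    in a trained model they would be learnable parameters (assumption).
    """
    ys = np.linspace(0.0, 1.0, height)[None, :, None]  # (1, H, 1)
    xs = np.linspace(0.0, 1.0, width)[None, None, :]   # (1, 1, W)
    mx, my, sx, sy = (np.asarray(v, dtype=float)[:, None, None]
                      for v in (mu_x, mu_y, sigma_x, sigma_y))
    # Broadcasting (1,1,W) against (N,1,1) and (1,H,1) against (N,1,1) gives (N, H, W)
    g = np.exp(-((xs - mx) ** 2 / (2 * sx ** 2) + (ys - my) ** 2 / (2 * sy ** 2)))
    # Rescale each map so that its peak equals 1 (one possible normalization)
    return g / g.max(axis=(1, 2), keepdims=True)


def add_priors(features, priors, weights):
    """Concatenate priors to the features along the channel axis and mix them
    with a 1x1 convolution. features: (C, H, W), priors: (N, H, W),
    weights: (C_out, C + N). Returns (C_out, H, W)."""
    stacked = np.concatenate([features, priors], axis=0)
    return np.einsum("oc,chw->ohw", weights, stacked)


# Hypothetical example: four priors on a 30x40 feature grid with 8 channels
priors = gaussian_priors(30, 40,
                         mu_x=[0.5, 0.3, 0.7, 0.5], mu_y=[0.5, 0.5, 0.5, 0.4],
                         sigma_x=[0.2, 0.1, 0.1, 0.3], sigma_y=[0.2, 0.1, 0.1, 0.15])
rng = np.random.default_rng(0)
features = rng.normal(size=(8, 30, 40))
out = add_priors(features, priors, rng.normal(size=(1, 8 + 4)))
print(priors.shape, out.shape)  # (4, 30, 40) (1, 30, 40)
```

Because the Gaussian parameters are free, training can place and shape each prior wherever fixations tend to concentrate, instead of relying on a single hand-crafted center blob.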

LSUN Challenge 2017

Our latest saliency prediction model ranked first in the LSUN 2017 Saliency Challenge, held at CVPR 2017 in Honolulu, Hawaii.

Our model integrates an LSTM-based attentive mechanism that iteratively attends to different locations of the image and refines the prediction. A variation of this model has been accepted for publication in IEEE Transactions on Image Processing; the paper is listed in the Publications section below.
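The attend-and-refine loop described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' code. It assumes a standard ConvLSTM whose input, forget, output and candidate gates are computed with 3x3 convolutions. At every step, an attention map is computed from the input features and the previous hidden state, normalized with a softmax over spatial positions, and used to reweight the features before the LSTM update. The kernel size, number of steps, channel counts and all function names (`conv2d`, `spatial_softmax`, `init_params`, `attentive_convlstm`) are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2d(x, w, b):
    """'Same'-padded 2D cross-correlation. x: (C, H, W), w: (O, C, k, k) with odd k, b: (O,)."""
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding keeps H and W unchanged
    _, height, width = x.shape
    out = np.zeros((w.shape[0], height, width))
    for i in range(k):
        for j in range(k):
            # Accumulate the contribution of kernel offset (i, j) for all channels at once
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + height, j:j + width])
    return out + b[:, None, None]


def spatial_softmax(z):
    """Softmax over all spatial positions of a (1, H, W) map, so the result sums to 1."""
    e = np.exp(z - z.max())
    return e / e.sum()


def init_params(c_in, c_hid, k=3, seed=0):
    """Random weights for the hypothetical attentive ConvLSTM below."""
    rng = np.random.default_rng(seed)

    def w(o, c):
        return rng.normal(0.0, 0.1, size=(o, c, k, k))

    return {
        # Attention branch: Z = V_a * tanh(W_a * X + U_a * H + b_a) + b_v
        "W_a": w(c_hid, c_in), "U_a": w(c_hid, c_hid), "b_a": np.zeros(c_hid),
        "V_a": w(1, c_hid), "b_v": np.zeros(1),
        # ConvLSTM gates, stacked as [input, forget, output, candidate]
        "W_x": w(4 * c_hid, c_in), "W_h": w(4 * c_hid, c_hid), "b": np.zeros(4 * c_hid),
    }


def attentive_convlstm(x, params, steps=4):
    """Run `steps` attend-and-update iterations on features x of shape (C, H, W).

    Returns the final hidden state (C_hid, H, W) and the list of attention maps.
    """
    c_hid = params["U_a"].shape[0]
    _, height, width = x.shape
    h = np.zeros((c_hid, height, width))
    c = np.zeros((c_hid, height, width))
    zero_hid, zero_gates = np.zeros(c_hid), np.zeros(4 * c_hid)
    x_proj = conv2d(x, params["W_a"], params["b_a"])  # input term is the same at every step
    attention_maps = []
    for _ in range(steps):
        # Attention depends on the features and on the current hidden state h
        z = conv2d(np.tanh(x_proj + conv2d(h, params["U_a"], zero_hid)), params["V_a"], params["b_v"])
        a = spatial_softmax(z)  # (1, H, W)
        attention_maps.append(a)
        x_att = a * x  # broadcast the single attention map over all feature channels
        gates = conv2d(x_att, params["W_x"], params["b"]) + conv2d(h, params["W_h"], zero_gates)
        i, f, o, g = np.split(gates, 4, axis=0)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h, attention_maps


rng = np.random.default_rng(1)
features = rng.normal(size=(8, 12, 16))
h, maps = attentive_convlstm(features, init_params(c_in=8, c_hid=6), steps=3)
print(h.shape, len(maps))  # (6, 12, 16) 3
```

In a full model the final hidden state would still have to be mapped to a single-channel saliency map, for example by a further convolution; that readout is omitted here. Because each attention map is conditioned on the previous hidden state, successive steps can shift focus to different regions, which is the intuition behind the iterative refinement.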

Publications

- Marcella Cornia, Lorenzo Baraldi, Giuseppe Serra, Rita Cucchiara. Paying More Attention to Saliency: Image Captioning with Saliency and Context Attention. ACM Transactions on Multimedia Computing, Communications, and Applications, vol. 14, pp. 1-21, 2018. [pdf] [DOI]

- Marcella Cornia, Lorenzo Baraldi, Giuseppe Serra, Rita Cucchiara. SAM: Pushing the Limits of Saliency Prediction Models. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, pp. 1890-1892, June 18-22, 2018. [pdf] [DOI]

- Marcella Cornia, Lorenzo Baraldi, Giuseppe Serra, Rita Cucchiara. Predicting Human Eye Fixations via an LSTM-based Saliency Attentive Model. IEEE Transactions on Image Processing, vol. 27, pp. 5142-5154, 2018. [pdf] [DOI]

- Marcella Cornia, Lorenzo Baraldi, Giuseppe Serra, Rita Cucchiara. Visual Saliency for Image Captioning in New Multimedia Services. 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, pp. 309-314, July 10-14, 2017. [pdf] [DOI]

- Marcella Cornia, Lorenzo Baraldi, Giuseppe Serra, Rita Cucchiara. A Deep Multi-Level Network for Saliency Prediction. 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, December 4-8, 2016. [pdf] [DOI]

- Marcella Cornia, Lorenzo Baraldi, Giuseppe Serra, Rita Cucchiara. Multi-Level Net: a Visual Saliency Prediction Model. Computer Vision ECCV 2016 Workshops, vol. 9914, Amsterdam, The Netherlands, pp. 302-315, October 9, 2016. [pdf] [DOI]